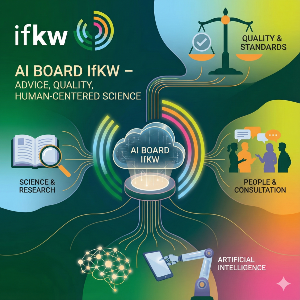

A brief introduction to the AI Board of the IfKW

Artificial intelligence is increasingly shaping research, teaching, and administration, and at the same time it raises new ethical, legal, and practical questions for academic work. The AI Board (AIB) of the Department of Media and Communication supports students, teachers, and researchers in using AI responsibly, transparently, and in compliance with applicable regulations. The AIB's recommendations are based on fundamental academic principles such as autonomy, responsibility, transparency, replicability, fairness, and diversity. At the same time, the board takes into account the specific challenges of AI systems, such as possible hallucinations, bias, lack of fact-checking, or data security issues.

The AI Board sees itself as an easily accessible point of contact for all questions relating to the use of AI: from didactic questions and the development of teaching and learning materials to the design of research processes and the clarification of examination rules. Enquiries of any kind can be sent to aib@ifkw.lmu.de at any time.

Guidelines on the use of AI for study, research, and teaching

Algorithmic information processing is part of everyday work, learning, and teaching. Teachers use these new technical possibilities when planning their seminars and lectures. Their role also includes training students in the use of digital agents based on algorithms and language models: raising students' awareness of these tools and enabling them to work with them competently. That is why students at the IfKW are allowed to use AI in their studies, but only under clear rules that serve to protect academic integrity.

Points of Orientation